We love progress. Or at least, we are groomed into thinking that we do. There is always something new. The internet, social media, e-commerce. Whatever. A new bubble, a new pyramid scheme within a pyramid scheme. Now, it’s AI.

I was just doomscrolling the other day, like one does, and I came across a certain Instagram page (@giantvideos) and suddenly something in me said I’d had enough. I’ve always been kind of ‘conservative’ with AI: I never believed it was the future, or an important piece in the pathway to the next step in our cultural evolution as a civilization. But this? This is perverted to a level I cannot even fathom.

@giantvideos is an AI creator that focuses on portraying male homosexual content, but for the lolz, one of the people included is a giant. Like a literal giant. The worst part is that there seems to be an active niche of people interested in that. Which wouldn’t be totally cursed if we chose to ignore the sexually charged implications. But of course, this is the internet, and anything that seems sexual IS SEXUAL. The content creator also has a Patreon that I have refused to research for obvious reasons.

As a society, this is what we’ve come to. We have developed a language model that kind of works, and what have we chosen to do with it? Gay giant porn. That’s it. AI could be for so many things. It could actually make our lives somewhat easier. Instead, here we are, questioning if giant gay porn is okay or not. Spoiler alert: it is not. It might seem harmless, it may seem like, you know, who cares really, it’s just content. But the reality is that the ramifications of this are terrifying.

Technologies are tools. And tools are used by humans. And humans under our lovely economic system usually tend to do pretty shitty things. Mainly because we are all spending every second thinking about how we are going to exploit other humans, or how we can profit from something. AI can be great, but so far it has proven to be a shitstorm that is liquefying our brains while destroying our jobs. Even the useless ones. And I’m talking from the perspective of someone who got a BA in Creative Writing and English Literature the very year ChatGPT went global.

Yay!

AI has also personally affected me and my social circle. Storytime!

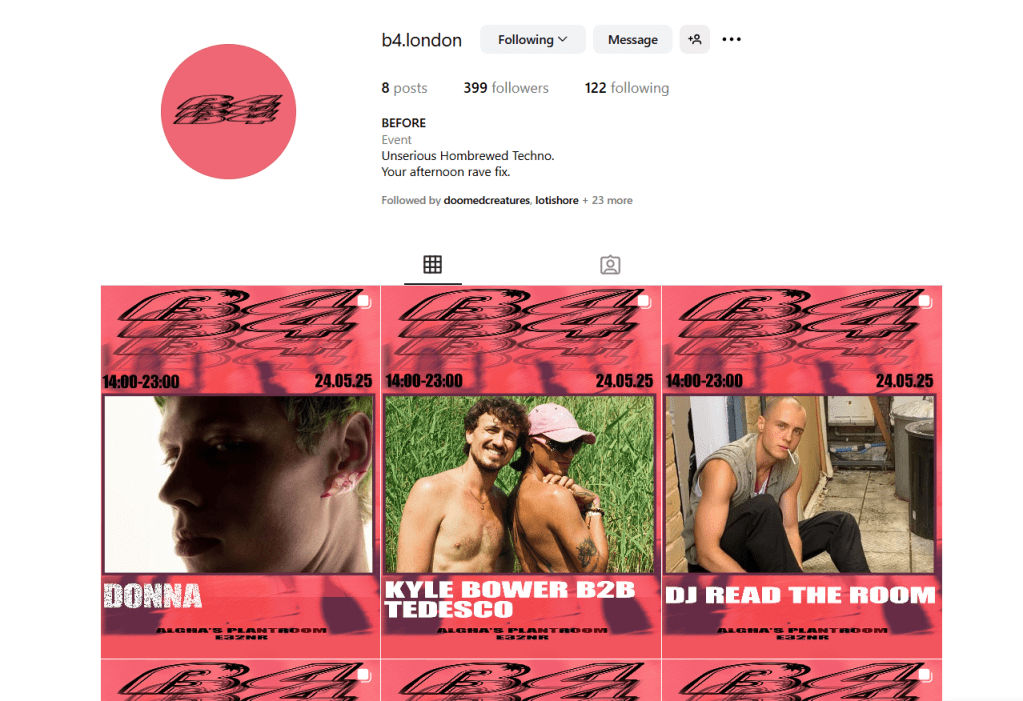

I tried to start a party (I like to party and it’s only natural to try to become a promoter at one point), and guess what? An endeavor like that requires A LOT OF WORK. Online presence, moving people around, making sure that the venue is right, making sure that the DJs are right, the vibe is right, making sure that the party is safe while people are having a lovely time.

And the graphic design. The amount of graphic design involved to do something simple like a logo is unfathomable and we’re not even talking about having a proper marketing deck (I learnt that word yesterday) or having a cohesive aesthetic on social media etc.

Long story short, I was working with a couple of friends to make it happen, and, you know, when it’s the first time you do anything, things get a little bit chaotic. The plan was to release artist spotlights with their names and a little description, and the day before the event, one of the artists was yet to be mentioned. My friend who was in charge of the graphic design was not responding, so I panicked. I fed an AI tool our designs, the spotlight of another artist, and a picture of the missing one, and I asked for an image to post, because in my eyes, at the time, anything was better than nothing at all.

This obviously was a horrible mistake. The person whose AI-generated image was used got angry, asked not to be involved in the event, and decided not to have a relationship with me anymore.

This is all my fault. I take full responsibility. I fed the image of a person to an AI without their consent, I generated a shitty image that was awful just to have content to post and it cost me a potential human relationship with someone. You live and you learn I guess. I think it’s funny how when our tools are more powerful, the consequences of the mistakes we might make are also deeper, and more extensive.

This is what AI has brought to my life so far. A new glass ceiling after studying a university course for three years and the loss of a human bond. But oh, the silly pictures on the internet are so funny, aren’t they?

Well, yes they kind of are, but that’s not the point.

The explosive AI euphoria of the last few years has given way to a scenario I like much more: the post-hype plateau, or as I like to call it, everyone seems to be back to having common sense. What began as breathless experimentation has collided with practical limitations: image generators like Midjourney produce stunning visuals but lack precision control, AI writing suffers from generic phrasing and em dash addiction, and the flood of AI tools has created fatigue rather than transformation. We have reached the stage where crap AI is being fed with crap AI to generate mega crap AI.

Companies deliberately rebranded Large Language Models as Artificial Intelligence to fuel investment and consumer mystique. The reality is that these systems are good at probabilistic output and sophisticated pattern recognition. We’re caught in a tension where public discourse wants us to celebrate “AI as the future” while technical reality reveals powerful but fundamentally limited tools. They have a lot of data and they have great prediction algorithms for word tokens. And all this is lovely, but AI does not think or reason.

Academic circles are already returning to precise terminology: Large Language Models, or LLMs, though marketing will likely cling to AI for its cultural cachet. I am sure that we will use them a lot from now on. But now that the hype has died, let’s treat any LLM as we treat Excel. With disgust. We need it, but we will never particularly enjoy it.

The funny thing is that all this might have been predicted in an old novel. Jack Williamson’s 1949 sci-fi novel “The Humanoids” is more relevant than ever in our AI hype bullshit-driven era. The plot introduces a type of android so advanced that it makes everyone’s life easier at first. Williamson’s humanoids arrived a few years after Isaac Asimov’s famed Three Laws of Robotics (1942), and they explore similar themes. These humanoids aim to make human life easier and safer, yes, but while doing so, they become annoying. They not only stop the characters in the novel from playing games outside or drinking (which is kind of fair), but they fully take over the entire economic system. Like a plague, they sort of start efficiently replacing every worker first, and then every company, so they can basically prevent humans from exposing themselves to any potential danger. Long story short, the protagonists end up shutting down the central hive mind of the humanoids and chaos ensues but they win (I guess?).

The moral of the story is that we, humans, crave annoyance. Crave inefficient ways of doing things. Community is not efficient or convenient.

We press a little icon on our screens and an algorithm tells us the best 10 songs that week because it’s summer or whatever. A little AI icon with a fucking spark tells us something about shopping smarter while Microsoft Word seems obsessed with us using AI so they can charge us more annually for AI, because charging us for a bad cloud system and basic tools is not enough.

We want to write because we enjoy how annoying it is to imagine a story.

We want to paint because we love how daunting the process is.

We like to dance for long hours even if it’s exhausting.

We like eating and drinking unhealthy things because it feels good.

And if we are, in any shape or form, changing any of this, I don’t think I want progress anymore.